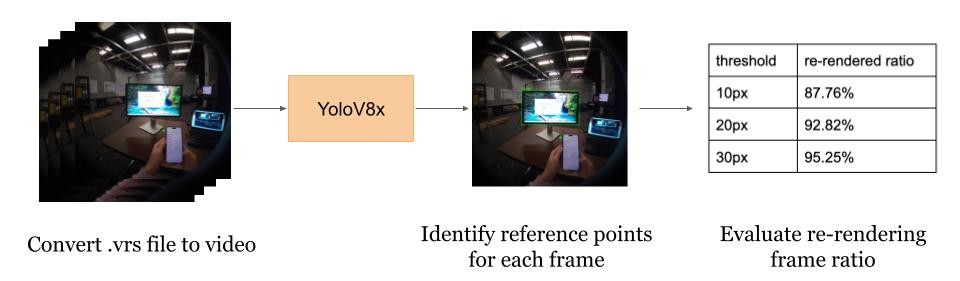

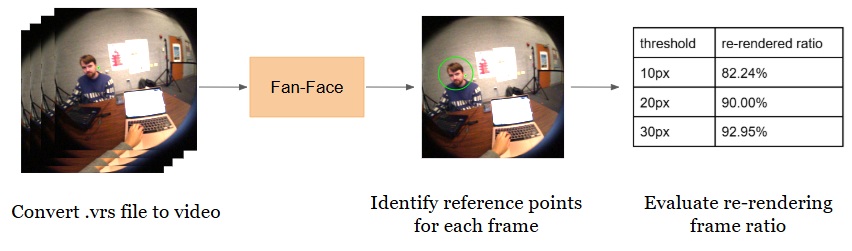

Leveraging Object Detection Models for Power Savings in AR Glasses

This project report, written in collaboration with Julie (Juhyun) Lee, presents results from my mentored research in spring 2025 under Dr. Henry Fuchs (Federico Gil Distinguished Professor). It explores how object and face detection models can be used to reduce redundant rendering in AR glasses, improving power efficiency in real-world productivity scenarios.

For the figures above, we recorded real-world video in two different office scenarios and fed it into our pipeline, which estimated the power savings at different movement thresholds. In the first scenario (working on two monitors), we project that ~95% of the frames in the video did not need to be re-rendered and only needed to be warped. In the second scenario (a group meeting with one monitor), we project that ~93% of frames did not need to be re-rendered and could likewise be warped using a small amount of compute.
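The report does not describe how the movement threshold is applied, so the following is only a minimal sketch of one plausible reading. It assumes a detector produces per-frame bounding boxes, that boxes are matched across frames by list index, and that "movement" is the pixel displacement of box centers since the last fully rendered frame. If every detected object has moved at most `threshold` pixels, the frame is counted as warpable; otherwise it is re-rendered and becomes the new reference. `Box`, `max_center_shift`, and `fraction_warpable` are hypothetical names for illustration, not the project's actual pipeline.

```python
from dataclasses import dataclass
from math import hypot


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box in pixel coordinates (hypothetical format)."""
    x1: float
    y1: float
    x2: float
    y2: float

    def center(self) -> tuple[float, float]:
        return ((self.x1 + self.x2) / 2, (self.y1 + self.y2) / 2)


def max_center_shift(ref: list[Box], curr: list[Box]) -> float:
    """Largest center displacement between a reference frame and the current frame.

    Assumption: detections are matched by index (object i in `ref` is object i
    in `curr`). A change in the number of detections is treated as unbounded
    motion, which forces a re-render.
    """
    if len(ref) != len(curr):
        return float("inf")
    shifts = [
        hypot(c.center()[0] - r.center()[0], c.center()[1] - r.center()[1])
        for r, c in zip(ref, curr)
    ]
    return max(shifts, default=0.0)


def fraction_warpable(frames: list[list[Box]], threshold: float) -> float:
    """Fraction of all frames that could be warped instead of re-rendered."""
    if not frames:
        return 0.0
    reference = frames[0]  # the first frame is always fully rendered
    warped = 0
    for curr in frames[1:]:
        if max_center_shift(reference, curr) <= threshold:
            warped += 1  # small motion: reuse the last render by warping it
        else:
            reference = curr  # large motion: re-render and use this frame as the new reference
    return warped / len(frames)
```

For example, a single box that drifts by 1 px per frame and then jumps 48 px yields a warpable fraction of 0.6 over five frames at a 5 px threshold. Comparing against the last rendered frame, rather than the immediately preceding one, keeps slow drift from accumulating unnoticed. Sweeping `threshold` over a range gives savings as a function of movement threshold, similar in spirit to the threshold sweep described above.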